Deep ViT Features as Dense Visual Descriptors

| 1 Weizmann Institute of Science | 2 Berkeley Artificial Intelligence Research |

ECCVW 2022 "WIMF" Best Spotlight Presentation

| | Paper | Supplementary Material | Code | |

Abstract

We leverage deep features extracted from a pre-trained Vision Transformer (ViT) as dense visual descriptors. We demonstrate that such features, when extracted from a self-supervised ViT model (DINO-ViT), exhibit several striking properties: (i) the features encode powerful high level information at high spatial resolution--i.e., capture semantic object parts at fine spatial granularity, and (ii) the encoded semantic information is shared across related, yet different object categories (i.e. super-categories). These properties allow us to design powerful dense ViT descriptors that facilitate a variety of applications, including co-segmentation, part co-segmentation and correspondences -- all achieved by applying lightweight methodologies to deep ViT features (e.g., binning / clustering). We take these applications further to the realm of inter-class tasks -- demonstrating how objects from related categories can be commonly segmented into semantic parts, under significant pose and appearance changes. Our methods, extensively evaluated qualitatively and quantitatively, achieve state-of-the-art part co-segmentation results, and competitive results with recent supervised methods trained specifically for co-segmentation and correspondences.

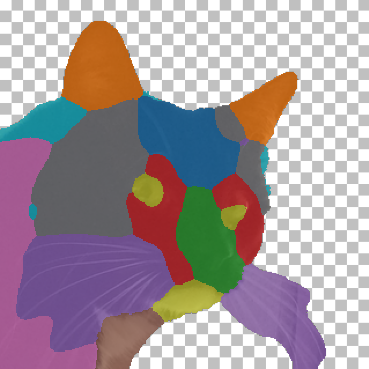

PCA Visualization

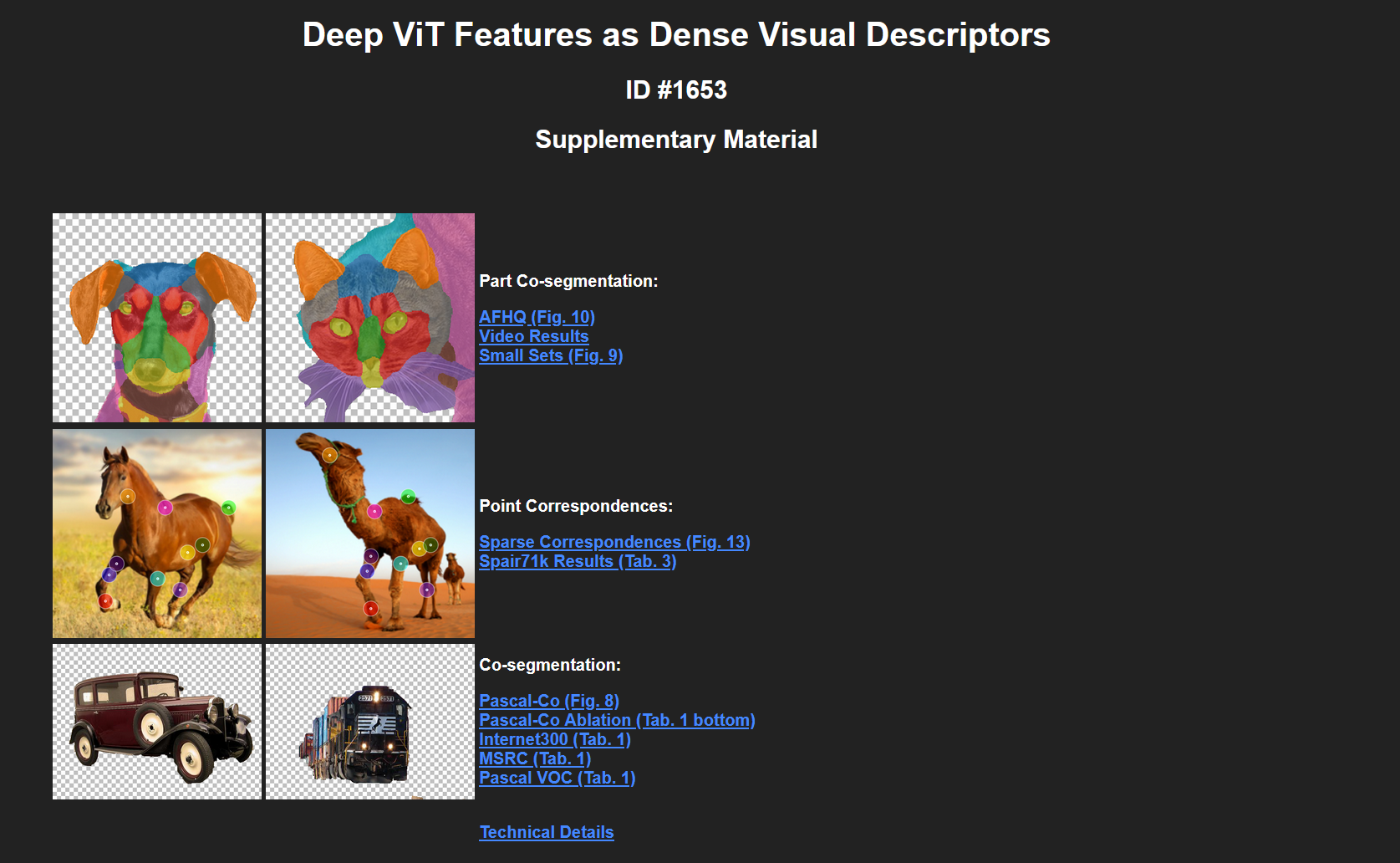

Part Co-segmentation examples

We apply clustering on Deep ViT spatial features to co-segment common objects among a set of images, and then further co-segment the common regions into parts. The parts remain consistent under variations in appearance, pose, scale, and under different yet related classes.The method can be applied on as little as a pair of images and as much as thousands of images.

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

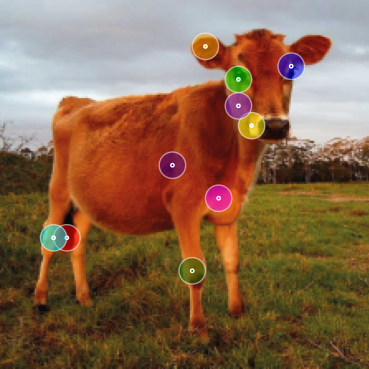

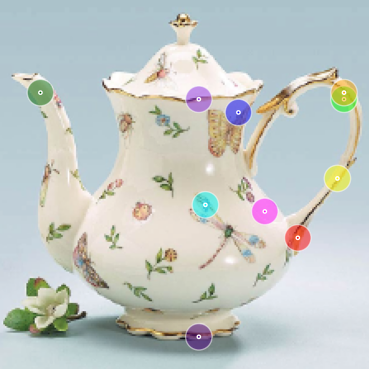

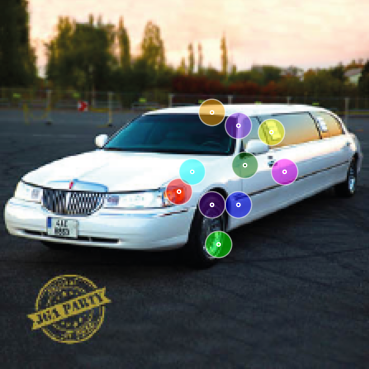

Point Correpondences examples

We leverage Deep ViT features to automatically detect semantically corresponding points between images from different classes, under significant variations in appearance, pose and scale.  |

|

|

|

|

|

|

|

|

Video Part Co-segmentation examples

We apply our part co-segmentation method on a collection of frames instead of a set of images, to recieve temporally consistent part co-segmentation. No temporal information is used.| Original Video | Part Co-segmentation |

|---|---|

Paper

|

Deep ViT Features as Dense Visual Descriptors |

Supplementary Material

|

Code

|

[code] |

Bibtex

Acknowledgments

We thank Miki Rubinstein, Meirav Galun, Niv Haim and Kfir Aberman for their useful comments.